Flow is a popular word in the machine learning lexicon. It signifies the concept of a workflow, a critical piece of software in the ML stack that orchestrates jobs, pushing each one through a sequence of steps in a defined end-to-end process. MFlow, Argo Workflow, and Kubeflow are three open-source Kubernetes-native tools that do just that.

They were built on top of Kubernetes and are therefore able to take advantage of all the Kubernetes features. And Kubernetes is turning into the de-facto container orchestration standard for machine learning if it isn’t already.

Other popular open-source workflow engines are Luigi and Airflow. However, they’re not Kubernetes-native and by default, not ML friendly.

- Apache Airflow: Developed at Airbnb. Initial release June 2015. Has 23k stars on GitHub.

- Luigi: Developed by Spotify: Initial release in 2011. Has 15k stars on GitHub.

MLflow

MLflow, developed by Databricks, is more than just a workflow tool, it is a platform with a comprehensive set of features that does much more. It manages the entire ML lifecycle, similar to Uber’s Michelangelo. Michelangelo is a revolutionary and proprietary platform that drives all ML innovations within Uber, managing the entire end-to-end process, from data preparation to training to deployment to monitoring, and so on. Another tool in this domain is Facebook’s FBLearner Flow.

MLflow supports several frameworks and tools. At the most basic level, it eases the process of experimentation and deployment at scale. One of the most powerful features is reproducibility, in that the same data set can be fed into multiple frameworks multiple times and the results will be the same.

One interesting tidbit, Facebook noted that the “largest improvements in accuracy came from quick experiments, feature engineering, and model tuning rather than applying fundamentally different algorithms.” The four components of MLflow are as follows:

- MLflow Tracking: Record, query, and track experiments

- MLflow Projects: Package code and reproduce on any system

- MLflow Models: Deploy models on any platform

- Model Registry: Stores, annotates, discovers, and manages ML models in a centralized repository

Argo Workflow

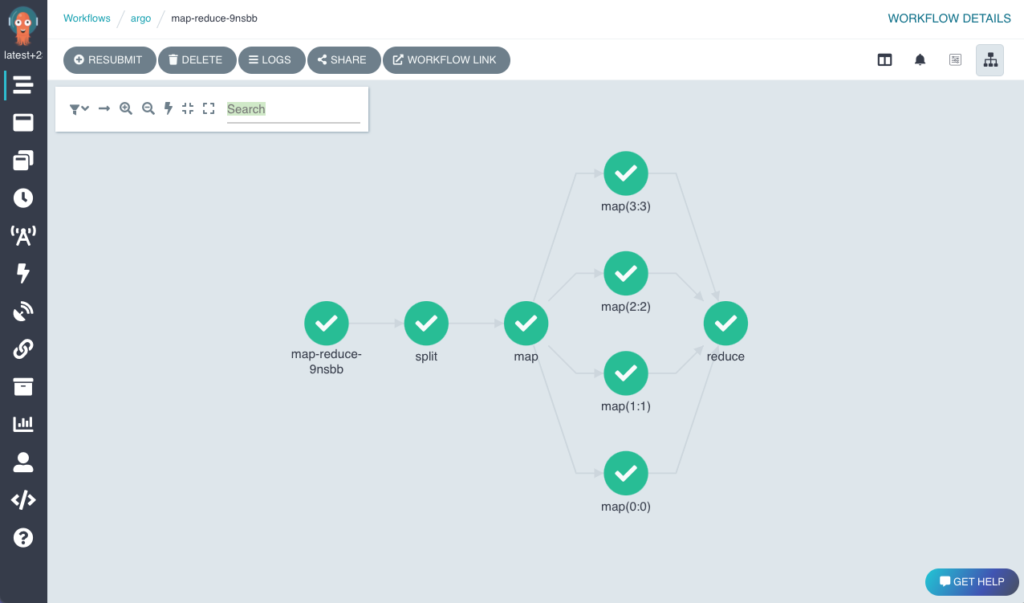

Argo Workflow is one of the most popular open-source Kubernetes-native workflow engines (9k stars on Github). Each step is defined within a container and it works as a directed acyclic graph (DAG) where “information must travel between vertices in a specific direction (forward)” but can’t travel back. The product is used by many, from startup to Fortune 500, and it’s highly scaleable, where it can run thousands of workflows concurrently. Argo Workflows is used for:

- ML pipelines but not specific to ML

- Extract, transform, and load (ETL)

- CI/CD and automation

- Data processing and batch

The other Argo Products include:

- Argo CD: GitOps tool for continuous delivery on Kubernetes

- Argo Rollouts: Controller that provides blue-green and canary deployment, and canary analysis

- Argo Events: Event-driven tool that helps trigger container objects, Argo workflows, and serverless workloads from different sources like S3, schedules, pubsub, etc.

Kubeflow

Google developed Kubeflow is an open-source Kubernetes-native platform for creating workflows, training models, and deploying them. It was designed specifically for machine learning. One of the important features is Kubeflow Pipelines which was developed from Argo Workflow.

Kubeflow integrates with Chainer, XGBoost, MXNet, PyTorch, Istio, and several other tools. The goal of this product is to simplify the process of orchestrating, training, and deploying ML models. It’s a critical component to Google’s portfolio of ML services as well as many other startups. Kubeflow features include:

- Makes experimentation

- Scale experimentation and model deployments to thousands

- Supports AutoML

- Re-usable ML workflows

- End-to-end orchestration

- Support for a wide array of open source products

- Hard work is done behind the scenes

In summary, open-source Kubernetes-native workflow engines are playing an important part in simplifying the end-to-end process. This type of work falls under the MLOps umbrella, which marries machine learning with DevOps. Using ML models should not be confined to the domain of data scientist, but open to DevOps engineers that have experience working with data.