Businesses that run traditional IT applications are accelerating their move to cloud platforms and, as a result, increasing their use of technologies such as containers and Kubernetes (K8s) to automatically package and deploy software applications.

As the use of Kubernetes continues to grow in the cloud computing era, integrating workflow systems with it has become more popular.

Workflow systems allow developers to define, execute, and manage workflows using containerized applications and orchestration systems such as Kubernetes. They use scheduling algorithms to determine the order in which the tasks of a workflow are performed. These algorithms are frequently optimization methods that map tasks to resources to improve efficiency and performance.[1]
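To make the idea of task ordering concrete, here is a minimal sketch. It is not the algorithm of any particular engine: it assumes a hypothetical four-task workflow (the task names are invented for illustration) and uses Python's standard `graphlib` module to derive an execution order that respects the dependencies. Real schedulers additionally weigh resource availability when mapping tasks to nodes.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def execution_order(deps):
    """Return one valid order in which every task follows its dependencies."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
```

Tasks with no dependency between them, such as `transform` and `validate` here, may appear in either order, which is exactly the freedom a scheduler can exploit to run them in parallel.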

Examples of open-source workflow engines include Pegasus, Galaxy, BioDepot, Nextflow, Pachyderm, Luigi, SciPipe, Kubeflow, MLflow, Apache Airflow, Prefect, and Argo. Many of them are container-based and require a K8s cluster in a cloud environment.

Argo Workflows System

Argo is an ongoing development project started by Applatix (later acquired by Intuit), a company that provided cloud-based solutions for automating and optimizing workflows. Its modules include Argo Workflows, Argo Rollouts, and Argo CD for managing and deploying applications on Kubernetes.

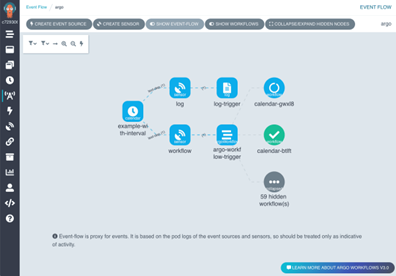

Argo Workflows is an open-source, container-native workflow engine for automating complex multi-step processes on Kubernetes. It runs each task in a workflow as a separate Kubernetes pod. Argo is designed to be easy to use, flexible, and scalable, and it supports features such as dependencies, branching, and error handling.[2] As the most popular workflow execution engine for K8s, Argo is hosted by the CNCF and implements its workflow functions through a CRD (Custom Resource Definition)[3], so you can run it on EKS, GKE, or any other K8s implementation.
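Because a workflow is a Kubernetes custom resource, it can be described as an ordinary manifest. The following is a minimal sketch that builds one as a Python dictionary and prints it as JSON. The function name, the `busybox` image, the template name `say-hello`, and the message are assumptions chosen for illustration; the `argoproj.io/v1alpha1` API version and the `Workflow` kind are the ones used by the Argo Workflows CRD.

```python
import json


def hello_workflow(image="busybox", message="hello from Argo"):
    """Build a minimal Workflow custom resource as a plain dictionary."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",  # API group of the Argo Workflows CRD
        "kind": "Workflow",
        "metadata": {"generateName": "hello-"},  # Kubernetes appends a random suffix
        "spec": {
            "entrypoint": "say-hello",  # the template that runs first
            "templates": [
                {
                    "name": "say-hello",
                    # A container template: Argo runs it in its own pod.
                    "container": {
                        "image": image,
                        "command": ["echo"],
                        "args": [message],
                    },
                }
            ],
        },
    }


manifest = hello_workflow()
# kubectl accepts JSON manifests as well as YAML ones.
print(json.dumps(manifest, indent=2))
```

Saving the output to a file and submitting it with `kubectl create -n argo -f <file>` should create the workflow. `create` is used rather than `apply` because the manifest relies on `generateName`.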

Specific use cases include:[2]

- CI/CD Pipelines

Argo modules allow users to implement Continuous Integration/Continuous Deployment (CI/CD) for data pipelines on Kubernetes. They also allow users to define workflows that include tasks such as building container images and deploying applications to a K8s cluster. You can find some code examples in the Argo documentation.

- ETL (Extract, Transform and Load)

Argo Workflows can be used to extract data from multiple databases (v2.4.2 supports two databases: Postgres and MySQL), transform it, and load it into a destination. In other words, a workflow can connect to a database, run SQL queries to extract the data, and save it to a file. Once the data has been extracted, you can use, for example, Apache Spark to process it, and Argo Workflows to load it into the destination and automate the whole process.

- Infrastructure Automation

Developers can use Argo Workflows to automate infrastructure management. One example is InsideBoard, a SaaS company that aims to automate everything from the infrastructure to the product. It uses Argo Workflows to automate the delivery of dedicated cloud resources for each of its customers.

- Machine Learning

Users can employ Argo Workflows to automate the training, deployment, and updating of ML models by defining a workflow that includes tasks for data preparation, model training, evaluation, and retraining. For example, Arthur.ai employs Argo Workflows to accelerate operations and optimize ML model accuracy.
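As an illustration of how such a multi-step use case might be expressed, the sketch below builds a Workflow manifest whose entrypoint is a DAG over the four ML stages named above. The stage names, the `run-stage` template, the `python:3.11-slim` image, and the placeholder `echo` command are all assumptions made for this example; a real pipeline would run its own training and evaluation code in each stage.

```python
import json

# Hypothetical pipeline stages and their upstream dependencies.
STAGES = {
    "prepare-data": [],
    "train-model": ["prepare-data"],
    "evaluate-model": ["train-model"],
    "retrain-model": ["evaluate-model"],
}


def ml_pipeline(image="python:3.11-slim"):
    """Build a Workflow manifest whose entrypoint is a DAG over STAGES."""
    tasks = []
    for name, deps in STAGES.items():
        task = {
            "name": name,
            "template": "run-stage",
            "arguments": {"parameters": [{"name": "stage", "value": name}]},
        }
        if deps:
            # A task starts only after all listed tasks have finished.
            task["dependencies"] = deps
        tasks.append(task)
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "ml-pipeline-"},
        "spec": {
            "entrypoint": "pipeline",
            "templates": [
                {"name": "pipeline", "dag": {"tasks": tasks}},
                {
                    "name": "run-stage",
                    "inputs": {"parameters": [{"name": "stage"}]},
                    "container": {
                        "image": image,
                        # Placeholder command standing in for real stage logic.
                        "command": ["echo"],
                        "args": ["running {{inputs.parameters.stage}}"],
                    },
                },
            ],
        },
    }


print(json.dumps(ml_pipeline(), indent=2))
```

Reusing one parameterized template for every stage keeps the sketch short. In practice each stage would more likely have its own template and image.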

Quick Start Guide

The implementation process for Argo Workflows will depend on your specific needs and requirements, and the environment in which you are deploying it. To install, follow the instructions indicated in its Quick Start Guide.

Select the desired release, and install Argo Workflows according to the example below.

    kubectl create namespace argo
    kubectl apply -n argo -f https://github.com/argoproj/argo-workflows/releases/download/v<<ARGO_WORKFLOWS_VERSION>>/install.yaml

You have now set up the Argo server and a few other resources used by Argo. To reach the server from your machine, you can port-forward its port.

You can start creating your workflows using a declarative YAML file, specifying the workflow’s steps and any dependencies or conditions. As you run your workflows, you can use the Argo Workflows UI or the Kubernetes API to monitor their progress and troubleshoot any issues that arise.
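For troubleshooting through the Kubernetes API, it can help to reduce a workflow object to the phase of each step. The sketch below does this for a workflow already loaded as a dictionary. It assumes that the object's `status` carries an overall `phase` and a `nodes` map whose entries have `displayName`, `type`, and `phase` fields; the sample data is hypothetical and only mimics that shape.

```python
def summarize(workflow):
    """Return (overall phase, {step name: phase}) for a Workflow object."""
    status = workflow.get("status", {})
    steps = {
        node.get("displayName", node_id): node.get("phase", "Unknown")
        for node_id, node in status.get("nodes", {}).items()
        if node.get("type") == "Pod"  # keep only nodes that ran as pods
    }
    return status.get("phase", "Unknown"), steps


# Hypothetical sample, shaped like the JSON returned for a workflow.
sample = {
    "metadata": {"name": "etl-abc12"},
    "status": {
        "phase": "Failed",
        "nodes": {
            "etl-abc12": {"displayName": "etl-abc12", "type": "DAG", "phase": "Failed"},
            "etl-abc12-1": {"displayName": "extract", "type": "Pod", "phase": "Succeeded"},
            "etl-abc12-2": {"displayName": "load", "type": "Pod", "phase": "Failed"},
        },
    },
}

phase, steps = summarize(sample)
failed = [name for name, step_phase in steps.items() if step_phase in ("Failed", "Error")]
print(phase, failed)
```

An object of this kind can be obtained with `kubectl get workflow <name> -n argo -o json` and parsed with `json.loads` before being passed to `summarize`.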

Who Uses Argo Workflows?

More than 170 organizations and individuals use Argo to automate processes on Kubernetes. Examples of organizations include Alibaba Cloud, Google, Cisco, NVIDIA, Red Hat, and IBM.

Recent Releases

The Argo community releases new minor versions approximately every three months.[2] Some of the fixes and changes in recent releases are:

- v3.4.4 (2022-11-28): Upgrade kubectl to v1.24.8 to fix vulnerabilities.

- v3.4.3 (2022-10-30): Fix for the mutex not being initialized when the controller restarts.

- v3.4.2 (2022-10-22): Support Kubernetes v1.24.

- v3.4.1 (2022-09-30): Improve semaphore concurrency performance.

- v3.4.0 (2022-09-18): SDK workflow file.

References

[1] Shan, C., Wang, G., Xia, Y., Zhan, Y., & Zhang, J. (2022). KubeAdaptor: A Docking Framework for Workflow Containerization on Kubernetes. arXiv preprint arXiv:2207.01222.

[2] Argo, Argo workflows – github, 2021. URL: https://github.com/argoproj/argo

[3] Shan, C., Wang, G., Xia, Y., Zhan, Y., & Zhang, J. (2021, December). Containerized Workflow Builder for Kubernetes. In 2021 IEEE 23rd Int Conf on High Performance Computing & Communications; 7th Int Conf on Data Science & Systems; 19th Int Conf on Smart City; 7th Int Conf on Dependability in Sensor, Cloud & Big Data Systems & Application (HPCC/DSS/SmartCity/DependSys) (pp. 685-692). IEEE.