PySpark

PySpark is the Python API for Apache Spark, a fast, general-purpose cluster computing system. Spark was initially developed at the University of California, Berkeley, and later donated to the Apache Software Foundation.

Spark offers a wide range of APIs for advanced features: Spark SQL for SQL and DataFrames, Pandas API on Spark for Pandas workloads, MLlib for machine learning (ML), GraphX for graph processing, and Structured Streaming for stream processing. Note that GraphX is exposed through Spark's Scala API rather than through PySpark itself.

In addition, PySpark exposes Spark's core abstraction, the RDD (Resilient Distributed Dataset): a distributed collection of objects that is processed in parallel across multiple nodes.

PySpark enables users to process and analyze datasets using Python; Spark itself also offers APIs for Scala, Java, and R. The Spark environment includes an interactive shell and a web-based UI for data exploration in distributed environments.

Components

Spark SQL and DataFrame

Spark SQL and DataFrame are two powerful modules of the Apache Spark ecosystem that enable users to process and analyze data using Structured Query Language (SQL) and data frames, respectively.

With Spark SQL, users can directly execute SQL queries on structured data from Spark without using a separate database or specialized query language. On the other hand, DataFrames provide a programming interface to work with structured data in a distributed computing environment.

One of the key advantages of Spark SQL and DataFrame is that they allow users to integrate SQL queries and data frame operations with other Spark modules such as MLlib, GraphX, and Streaming.

Streaming

The Streaming module in Apache Spark enables users to perform real-time data processing and analysis. It is a fault-tolerant streaming processing system that supports batch and streaming workloads.

Spark Streaming uses a micro-batch processing model, where incoming data streams are divided into small batches and processed using the Spark engine. This approach provides near-real-time, fault-tolerant stream processing, with end-to-end latencies as low as about 100 milliseconds. Since version 2.3, Apache Spark has also included an experimental processing mode called Continuous Processing, which can achieve latencies as low as 1 millisecond.
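The micro-batch idea can be illustrated without Spark at all. The plain-Python sketch below is not Spark's implementation: it slices a stream by record count, whereas Spark forms batches by time interval, and the `micro_batches` helper and the sensor events are hypothetical. It only shows how a stream becomes a sequence of small, bounded jobs with state carried between them.

```python
from itertools import islice


def micro_batches(stream, batch_size):
    """Yield consecutive lists of up to batch_size records from a stream."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Hypothetical event stream: (sensor_id, reading) pairs arriving over time.
events = [("a", 1), ("b", 4), ("a", 3), ("b", 2), ("a", 5)]

running_totals = {}
batch_sizes = []
for batch in micro_batches(events, batch_size=2):
    batch_sizes.append(len(batch))
    # Each batch is a small bounded job; running_totals persists across batches.
    for sensor_id, reading in batch:
        running_totals[sensor_id] = running_totals.get(sensor_id, 0) + reading

print(batch_sizes, running_totals)
```

Latency in this model is bounded below by the batch interval, which is why a continuous mode is needed to go below it.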

MLlib

MLlib is an ML library built on top of Apache Spark, designed to support a wide range of supervised and unsupervised learning algorithms. With its rich set of algorithms and utilities, MLlib is a valuable tool for building robust and scalable ML applications.

This library includes algorithms to perform generalized linear regression, survival regression, decision trees, random forests, gradient-boosted trees, k-means clustering, and Gaussian mixture models. It also provides a variety of utilities to support ML workflows, such as model evaluation, hyper-parameter tuning, standardization, and normalization.

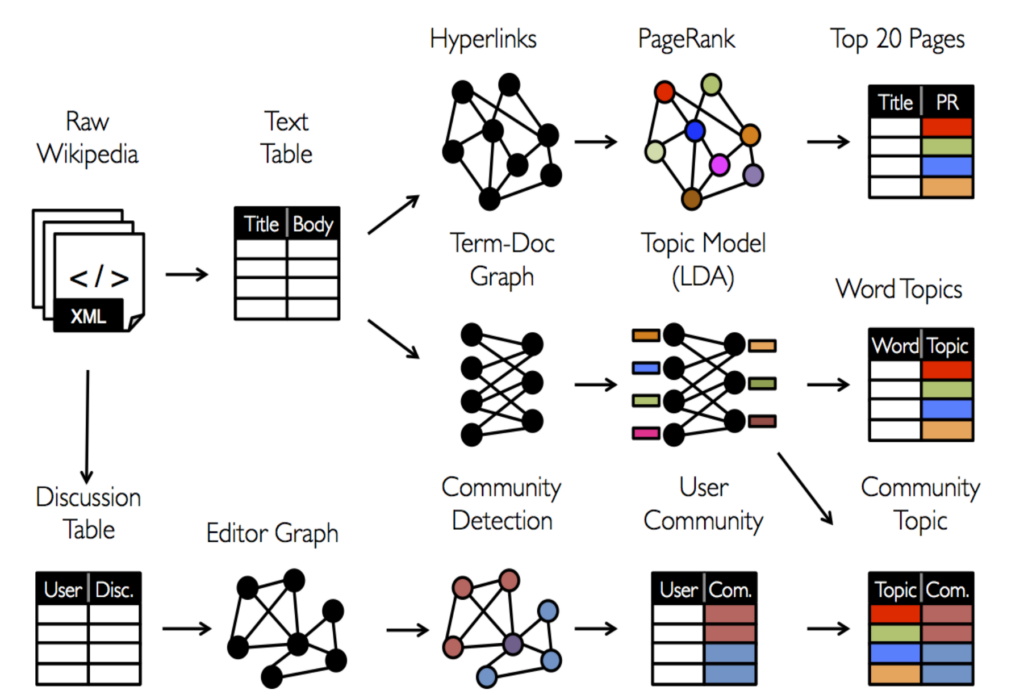

GraphX

GraphX is a distributed graph processing framework for analyzing and manipulating large graph-structured datasets. Users model their data as entities and the relationships among them, and GraphX provides graph computation capabilities over that representation.

Graph-structured data can be represented using either a graph schema or an RDD (Resilient Distributed Dataset) containing vertices and edges. In this representation, vertices correspond to entities, and edges represent the relationships between entities.

PySpark vs. Pandas

Pandas is an open-source Python package for analyzing structured tabular data. It enables efficient operations on arrays, data frames, and series, making it a valuable tool for data analytics, machine learning, and data science projects. The library can read and manipulate data from various sources and formats, including CSV, JSON, and SQL databases. When Pandas reads data from these sources, it creates a DataFrame object (similar to an SQL table or spreadsheet), which is a two-dimensional tabular data structure consisting of rows and columns.

PySpark allows users to process and analyze large-scale data in a distributed and parallel manner. It provides a flexible data source API to access various data sources, including Hive, Avro, Parquet, ORC, JSON, and JDBC. Unlike Pandas, which runs operations on a single node, PySpark can distribute data processing across multiple nodes (different machines in a cluster), which makes it more scalable and, for datasets too large for one machine, much faster than Pandas.

These features make PySpark a strong choice for larger datasets, where distributing the work across a cluster can make processing many times faster than running Pandas on a single machine.

Quick Installation Guide

PySpark requires Java 8 or later, so install Java first and set the environment variable JAVA_HOME to the Java installation directory. Installing PySpark with pip bundles Spark with the package. Alternatively, you can download Spark from the official website, extract it to a directory of your choice, and set SPARK_HOME to that directory.

Open the command prompt of your system and run the following command:

pip install pyspark

Once the installation is complete, you can verify it by running the following code in a Python IDE:

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("test").getOrCreate()

spark.range(5).show()

That’s it! You have successfully installed PySpark on your machine.

Highlights

Project Background

- Project: PySpark

- Author: Matei Zaharia

- Initial Release: 2014

- Type: Data Analytics and Machine Learning Framework

- License: Apache License 2.0

- Contains: Spark SQL, DataFrame, Streaming, MLlib (Machine Learning), and Spark Core.

- Language: Python, Scala, Java, SQL, R, C#, F#

- GitHub: apache/spark

- Runs On: Windows, Linux, macOS

- Twitter: –

Main Features

- Distributed task dispatching, scheduling, and basic Input-Output functionalities

- Provides support for structured and semi-structured data

- Streaming analytics

- Distributed graph-processing framework

- Support for multiple languages, including Python, Scala, Java, and R

- Parallel, distributed processing across cluster nodes

- Fault-tolerant

Prior Knowledge Requirements

- Users should understand basic programming concepts such as data structures, loops, functions, and methods.

- Users should have a basic understanding of the Python programming language and its syntax.

- Users should be familiar with the basic concepts of Apache Spark, including its architecture, RDDs (Resilient Distributed Datasets), transformations, actions, and data sources.

- Users should understand big data technologies, including Hadoop, SQL (Structured Query Language), NoSQL databases, and distributed computing.

Projects and Organizations Using PySpark

- Apache Mahout: Previously on Hadoop MapReduce, Mahout has switched to using Spark as the backend.

- Apache MRQL: A query processing and optimization system for large-scale, distributed data analysis, built on top of Apache Hadoop, Hama, and Spark.

- IBM Spectrum Conductor: Cluster management software that integrates with Spark and modern computing frameworks.

- Delta Lake: Storage layer that provides ACID transactions and scalable metadata handling for Apache Spark workloads.

- MLflow: Open source platform to manage the ML lifecycle, including deploying models from diverse machine learning libraries on Apache Spark.

- Source: Third-Party Projects.

Community Benchmarks

- 35,100 Stars

- 26,600 Forks

- 1,880+ Code contributors

- 68+ releases

- Source: GitHub

Releases

- v3.3.2 (2-17-2023): Update and Fixes: e.g., Extend SparkSessionExtensions to inject rules into AQE Optimizer.

- v3.3.1 (10-25-2022): Update and Fixes: e.g., Support Customized Kubernetes Schedulers.

- v3.3.0 (6-16-2022): Update and Fixes: e.g., Support complex types for Parquet vectorized reader.

- v3.2.0 (10-13-2021): Update and Fixes: e.g., Support push-based shuffle to improve shuffle efficiency.

- Source: Releases.