Seldon Core

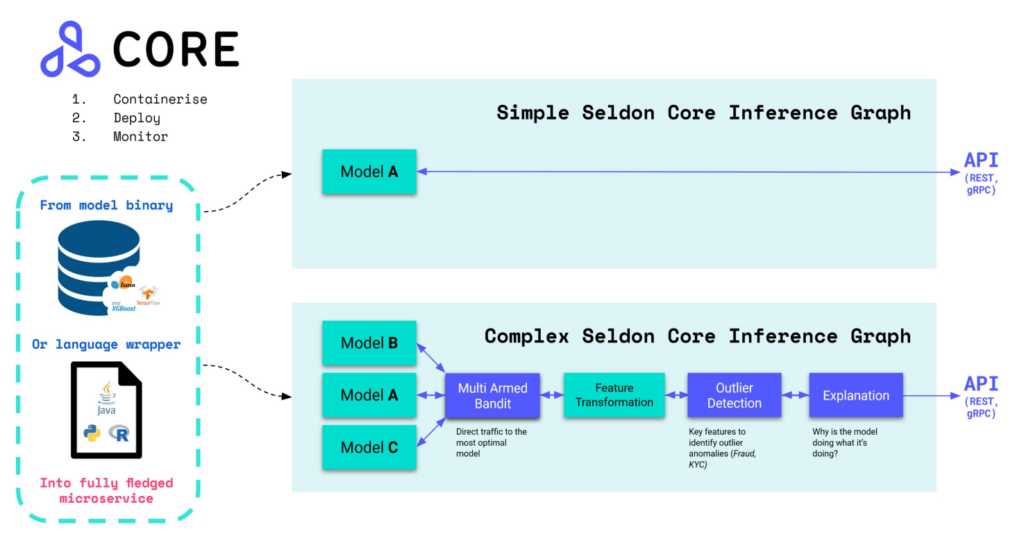

Seldon Core is an open-source MLOps platform designed to help users deploy and scale Machine Learning (ML) models. Built to run on Kubernetes (K8s), Seldon Core integrates with a wide range of open-source tools for reporting on and managing ML tasks.

The primary focus of Seldon Core is deployment, and it works with frameworks and libraries such as PyTorch, TensorFlow, and scikit-learn. To be deployed with Seldon Core, ML models must be containerized. This can be done with tools like Source-to-Image (S2I), which builds a container image from source code.
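As a rough illustration of what ends up inside such a container, the sketch below shows a plain Python class with a `predict` method, which is the shape Seldon's Python wrapper expects as far as we understand its convention. The class name `MeanThresholdModel` and its thresholding logic are hypothetical stand-ins for a trained model, not part of Seldon Core.

```python
from typing import List, Optional, Sequence


class MeanThresholdModel:
    """Toy stand-in for a trained model; the name and logic are hypothetical."""

    def __init__(self, threshold: float = 0.5) -> None:
        # A real model class would typically load trained weights here.
        self.threshold = threshold

    def predict(
        self,
        X: Sequence[Sequence[float]],
        features_names: Optional[List[str]] = None,
    ) -> List[List[int]]:
        # One output row per input row: 1 if the row mean exceeds the threshold.
        return [[1 if sum(row) / len(row) > self.threshold else 0] for row in X]


if __name__ == "__main__":
    model = MeanThresholdModel(threshold=0.5)
    print(model.predict([[0.9, 0.8], [0.1, 0.2]]))
```

A tool such as S2I would then package a class like this, together with its dependencies, into an image; the exact builder image and environment settings are not shown here and should be taken from the official documentation.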

Seldon supports running ML models in production at scale through features such as Advanced Metrics, Request Logging, Explainers, Outlier Detectors, A/B Tests, Canaries, and more.
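To make the idea behind A/B tests and canaries concrete, the following conceptual sketch splits simulated requests between two model versions according to percentage weights. It is only a simulation of the principle: in a real cluster the split is handled by the platform rather than by code like this, and the names `pick_predictor`, `simulate`, "main", and "canary" are hypothetical.

```python
import random
from collections import Counter
from typing import Dict


def pick_predictor(traffic: Dict[str, int], rng: random.Random) -> str:
    """Choose a predictor name with probability proportional to its weight."""
    names = list(traffic)
    weights = [traffic[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


def simulate(traffic: Dict[str, int], requests: int, seed: int = 0) -> Counter:
    """Route `requests` simulated calls and count how many each predictor gets."""
    rng = random.Random(seed)
    return Counter(pick_predictor(traffic, rng) for _ in range(requests))


if __name__ == "__main__":
    # Send roughly 10% of requests to the canary version.
    print(simulate({"main": 90, "canary": 10}, requests=10_000))
```

With a 90/10 split, about one request in ten reaches the canary, which limits the impact of a faulty new version while still producing enough traffic to compare the two.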

Components

Seldon Core is a platform that enables users to convert ML models into REST/gRPC microservices, whether they are built with frameworks such as TensorFlow, PyTorch, and H2O or written in programming languages such as Python and Java.

To achieve this goal, Seldon Core provides several components and services that include:

- Model Servers: These enable users to deploy their models into the production environment. Users can choose between two types of servers:

  - Reusable Model Servers: Pre-built and tested servers that can be used across multiple projects, reducing deployment time and effort.

  - Non-Reusable Model Servers: Custom-built servers designed for a specific ML model that cannot be easily adapted for reuse in other projects. These servers typically require more development time and effort than reusable model servers.

- Language Wrappers: A set of libraries that make it easier to containerize ML models written in popular programming languages, such as Python, Java, Go, Node.js, C++, and R.

- Seldon Deployment CRD: A Kubernetes custom resource definition (CRD) that lets users deploy ML models to a Kubernetes cluster and serve production traffic by defining an inference graph in YAML files. In this manifest file, users define which models must be deployed and how they are connected in the inference graph.

- Seldon Core Operator: It is a Kubernetes operator that controls the deployments of ML models. It enables users to take advantage of Kubernetes’ built-in features for managing containers and workloads.
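The Seldon Deployment manifest described in the list above can be sketched as a Python dictionary and printed as JSON, which `kubectl apply -f` accepts as well as YAML. The field names follow the Seldon Core v1 examples as we recall them and should be checked against the CRD reference. The helper name `sklearn_deployment`, the deployment name, the namespace, and the bucket path are hypothetical placeholders.

```python
import json
from typing import Any, Dict


def sklearn_deployment(
    name: str, model_uri: str, namespace: str = "seldon"
) -> Dict[str, Any]:
    """Build a single-model SeldonDeployment manifest (assumed v1 schema)."""
    return {
        "apiVersion": "machinelearning.seldon.io/v1",
        "kind": "SeldonDeployment",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "predictors": [
                {
                    "name": "default",
                    "replicas": 1,
                    # The inference graph: here a single pre-packaged server node.
                    "graph": {
                        "name": "classifier",
                        "implementation": "SKLEARN_SERVER",
                        "modelUri": model_uri,
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    # The bucket path below is a placeholder, not a real model location.
    manifest = sklearn_deployment("iris-model", "gs://example-bucket/sklearn/iris")
    print(json.dumps(manifest, indent=2))
```

Once a manifest like this is applied, the Seldon Core Operator is the component that turns it into running Kubernetes workloads.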

Services

- Service Orchestrator: A component that manages the routing and execution of requests through the inference graph. It reads the inference graph structure and, when an inference request is received, ensures that the request is passed to each node of the graph in the right order.

- Metadata Provenance: It can be used to specify metadata for each of the components (nodes) in the inference graph. The service orchestrator engine will probe each component in the graph for its metadata and use this information to derive the global inputs and outputs of the entire model.

- Metrics with Prometheus: Prometheus is an open-source monitoring system that collects and stores metrics from various sources in a database. The service orchestrator exposes the core metrics that can be scraped by Prometheus.

- Distributed Tracing with Jaeger: Seldon uses Jaeger, an end-to-end distributed tracing system, to monitor and obtain insights into latency and performance in a Seldon deployment.
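The routing behavior of the service orchestrator can be pictured with a small, self-contained sketch. This is not Seldon's implementation: it is a toy traversal, assuming each node transforms the payload and hands its output to its children in order. The `Node` class, `run_graph`, and the two example steps are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    """One step in a toy inference graph."""
    name: str
    step: Callable[[List[float]], List[float]]
    children: List["Node"] = field(default_factory=list)


def run_graph(node: Node, payload: List[float], trace: List[str]) -> List[float]:
    """Apply this node, then feed its output through each child in order."""
    trace.append(node.name)
    output = node.step(payload)
    for child in node.children:
        output = run_graph(child, output, trace)
    return output


if __name__ == "__main__":
    classifier = Node("classifier", lambda xs: [1.0 if x > 0.5 else 0.0 for x in xs])
    scaler = Node("scaler", lambda xs: [x / 10 for x in xs], children=[classifier])

    visited: List[str] = []
    print(run_graph(scaler, [2.0, 8.0], visited))  # [0.0, 1.0]
    print(visited)  # ['scaler', 'classifier']
```

The `trace` list records the order in which nodes were visited, which is the kind of per-request information that tracing and metrics tools are meant to surface in a real deployment.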

Seldon Core vs. Main Tools for MLOps

The table below compares Seldon Core with other main tools for managing and executing machine learning processes.

| | Seldon Core | BentoML | Kubeflow | MLflow |
| --- | --- | --- | --- | --- |
| License | Apache License 2.0. | Apache License 2.0. | Apache License 2.0. | Apache License 2.0. |
| Purpose | Deployment and monitoring. | Docker image build, ML deployment. | ML pipeline execution. | Experiment management. |
| Description | It is a platform that enables users to deploy ML models as production-ready REST/gRPC microservices. | It is a platform-agnostic framework for packaging and serving machine learning models. | It provides a platform with components to make ML workflow implementations on Kubernetes simple, portable, and scalable. | It is a workflow platform that manages the ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry. |
| Supported Frameworks | Seldon Core supports frameworks such as TensorFlow, PyTorch, H2O, ONNX Runtime, XGBoost, NVIDIA Triton Inference Server, SKLearn, Tempo, MLflow, and HuggingFace. | BentoML supports popular frameworks such as TensorFlow, PyTorch, scikit-learn, XGBoost, CatBoost, fast.ai, Keras, ONNX Runtime, and LightGBM. | Kubeflow supports a wide array of projects such as TensorFlow, PyTorch, Chainer, Apache MXNet, Ambassador, XGBoost, Istio, Nuclio, and more. | MLflow supports several frameworks such as TensorFlow, PyTorch, scikit-learn, MPI, MXNet, XGBoost, and Spark. |
| Environments | It runs on clusters, clouds, and on-premises environments that use Kubernetes. | It runs on any platform that supports Python. | It runs on clusters, clouds, and on-premises environments that use Kubernetes. | MLflow runs on various execution environments, including local machines, remote VMs, and containerized environments. |
| Components | Model Servers, Language Wrappers, Seldon Deployment CRD, Seldon Core Operator, Service Orchestrator, Metadata Provenance, Metrics with Prometheus, and Distributed Tracing with Jaeger. | Packages: “.Service()”, “.build()”, “.Bento()”, “.Runner()”, “.Runnable()”, “.Tag()”, “.Model()”, and “YataiClient()”. | Kubeflow Notebooks, Kubeflow Pipelines, Katib, Training Operators, and Model Serving. | MLflow Tracking, MLflow Projects, MLflow Models, and Model Registry. |

Sources: Seldon Core, BentoML, Kubeflow, MLflow.

Quick Start Guide: Seldon Core

The official documentation provides a detailed step-by-step installation procedure. For a brief illustration, here we’ll describe the procedure needed for Kubernetes.

First, you must install Kubernetes and Helm; please refer to the prerequisites for more information. If you don’t have Kubernetes installed already, you can follow the installation instructions provided by your cloud provider or use a local tool such as Minikube.

Before installing Seldon Core, you need to create a namespace for it. The suggested name is “seldon-system”:

```bash
kubectl create namespace seldon-system
```

Once Kubernetes is installed, you can install the Seldon Core Operator using the command below (Istio method). To install a specific version, add the “--version” flag.

```bash
helm install seldon-core seldon-core-operator \
    --repo https://storage.googleapis.com/seldon-charts \
    --set usageMetrics.enabled=true \
    --set istio.enabled=true \
    --namespace seldon-system
```
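After the operator is running and a model has been deployed, the model is reachable as a REST microservice. The sketch below only builds such a request without sending it. It assumes the Seldon Core v1 REST path and `ndarray` payload format as we recall them, plus a hypothetical ingress reachable at `localhost:8080`, a namespace `seldon`, and a deployment called `iris-model`; all of these should be adapted to the actual cluster.

```python
import json
import urllib.request
from typing import List


def build_prediction_request(
    host: str, namespace: str, deployment: str, rows: List[List[float]]
) -> urllib.request.Request:
    """Build (but do not send) a prediction request for a deployed model."""
    # Assumed Seldon Core v1 REST path; verify against the official docs.
    url = f"http://{host}/seldon/{namespace}/{deployment}/api/v1.0/predictions"
    body = json.dumps({"data": {"ndarray": rows}}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_prediction_request(
        "localhost:8080", "seldon", "iris-model", [[5.1, 3.5, 1.4, 0.2]]
    )
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # To actually send it: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes the URL and payload easy to inspect before any traffic reaches the cluster.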

Highlights

Project Background

- Project: Seldon Core

- Author: Seldon.io

- Initial Release: January 2018

- Type: MLOps Platform

- License: Apache-2.0

- Language: Python, Go, JavaScript, C++, HTML

- GitHub: SeldonIO

- Runs On: Kubernetes

- Twitter: SeldonIO

- YouTube: SeldonIO

Main Features

- It enables users to containerize ML models using pre-packaged servers and language wrappers.

- It has been tested and used on managed Kubernetes services from cloud providers, such as AWS EKS, Azure AKS, and Google GKE.

- It provides inference graphs to specify the deployment of an ML model as a microservice.

- It provides a variety of pre-built components, including Metadata Provenance, which enables the tracing of each model to its corresponding training system, data, and metrics.

- It supports integration with third-party applications such as Prometheus and Grafana.

Prior Knowledge Requirements

- Knowledge of ML algorithms, statistics, model training, evaluation, and deployment.

- Knowledge of the Python programming language, as Seldon Core supports deploying models built with popular Python libraries such as TensorFlow, PyTorch, and scikit-learn.

- Familiarity with cloud platforms to deploy ML models in a distributed or production environment.

- Understanding of workflow concepts, their structure, and how they can be used to automate and streamline processes.

- Familiarity with containerization technologies such as Docker and Kubernetes.

Projects and Organizations Using Seldon Core

- Red Hat: KFServing and Seldon were integrated into its OpenShift Kubernetes distribution, providing users with a cloud platform for easily deploying and scaling machine learning models.

- Covéa: “Seldon Deploy gave us the flexibility we needed to be able to manage the disparate policy data we had. Once models are deployed, we are now able to integrate our many data sets with explainability.” –Tom Clay, Chief Data Scientist.

- Exscientia: “Seldon has made huge difference to how we scale and deploy our inference Ecosystem.” –Sash Stasyk, MLOps Engineering Team Lead.

- Noitso: “The review process has gone from multiple days to just hours giving us a massive competitive advantage both for our existing customers and soon our potential customers.” –Thor Larsen, Data Scientist.

- Capital One: “With our ‘Model as a Service’ platform (MaaS) running on Seldon, we’ve gone from it taking months to minutes to deploy or update models.” –Steve Evangelista, Director of Product Management.

- Source: Seldon customers

Community Benchmarks

- 3,600 Stars

- 733 Forks

- 161+ Code contributors

- Source: GitHub

Releases

- v2.2.0 (2-2023): Fixes and updates, e.g., fix typos in 2.2.0 testing.

- v2.1.0 (1-17-2023): Fixes and updates, e.g., use Helm to install Core v2 via Ansible.

- v1.15.0 (12-6-2022): Fixes and updates, e.g., removing the dependabot bot.

- v2.0.0 (12-2-2022): Fixes and updates, e.g., fix image build.

- v1.14.1 (8-18-2022): Fixes and updates, e.g., adding instructions for running a prepackaged server in a separate pod.

- Source: Releases
