Kubeflow

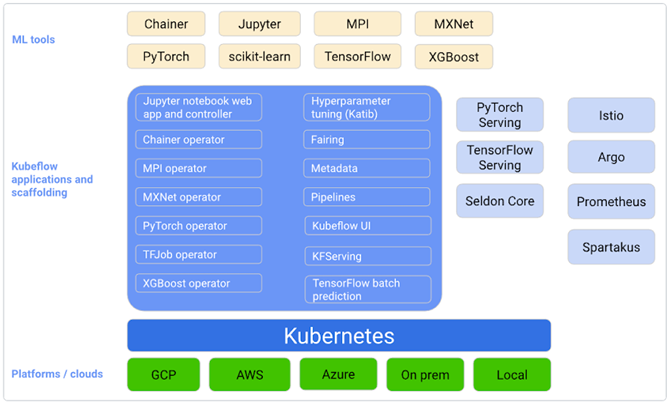

Kubeflow is a workflow engine that simplifies the deployment, management, and scaling of Machine Learning (ML) workloads by providing a platform that integrates with Kubernetes (K8s).

Google originally created Kubeflow to run TensorFlow jobs on Kubernetes, but over time it expanded to support a variety of cloud platforms and ML frameworks. It also makes it easy to create multi-stage, distributed ML pipelines covering data preparation, training, tuning, serving, monitoring, and logging, all running in containers on a K8s cluster.

Kubeflow is a valuable open-source tool for data scientists and engineers who want to use Kubernetes for ML operations. Its main goal is to make running ML workloads on Kubernetes simple, portable, and scalable, both through its own components and by integrating third-party tools.

Components

Notebooks

Kubeflow Notebooks runs web-based development environments inside the Kubernetes cluster as Pods. Early Kubeflow releases used JupyterHub for this; current releases provide native support for popular IDEs such as JupyterLab, RStudio, and Visual Studio Code (code-server).
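Under the hood, each notebook server is a Kubernetes custom resource of kind `Notebook` that the notebook controller turns into running Pods. The sketch below builds such a resource as a plain Python dict, assuming the `kubeflow.org/v1` Notebook API; the notebook name, profile namespace, resource requests, and image tag in the example are placeholders, not values taken from this article.

```python
import json


def notebook_manifest(name: str, namespace: str, image: str,
                      cpu: str = "500m", memory: str = "1Gi") -> dict:
    """Build a Kubeflow Notebook custom resource as a plain dict.

    Assumes the kubeflow.org/v1 Notebook CRD; the notebook controller turns
    this into a StatefulSet and Service running the IDE image.
    """
    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "Notebook",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "template": {
                "spec": {
                    "containers": [
                        {
                            # By convention the container shares the notebook's name.
                            "name": name,
                            "image": image,
                            "resources": {"requests": {"cpu": cpu, "memory": memory}},
                        }
                    ]
                }
            }
        },
    }


if __name__ == "__main__":
    # Placeholder values: adjust the namespace and image for your installation.
    manifest = notebook_manifest(
        name="demo-notebook",
        namespace="kubeflow-user-example-com",
        image="kubeflownotebookswg/jupyter-scipy:v1.8.0",
    )
    print(json.dumps(manifest, indent=2))
```

Writing the printed JSON to a file and running `kubectl apply -f` on it should start the server in that namespace; the image determines which IDE the server provides.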

Pipelines

Kubeflow Pipelines is a tool for building and deploying end-to-end ML workflows, with a user-friendly interface for managing pipelines. It is a scheduling engine for multi-step workflows that lets users chain together the steps of an ML pipeline, such as data preprocessing, training, and serving. Each step runs in a Docker container, which makes pipelines easy to package and deploy in a portable, consistent way. Because it is built on top of Argo Workflows, Pipelines users need to choose an Argo workflow executor.
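Because Kubeflow Pipelines runs on Argo Workflows, each chained step ultimately becomes a containerized task in an Argo DAG. The following sketch is not the Kubeflow Pipelines SDK; it hand-builds such a DAG for the preprocessing, training, and serving steps, assuming the `argoproj.io/v1alpha1` Workflow schema. The step names, the `echo` commands, and the `python:3.11-slim` image are placeholders.

```python
import json
from typing import Dict, List


def argo_dag_workflow(steps: Dict[str, List[str]],
                      image: str = "python:3.11-slim") -> dict:
    """Build an Argo Workflow whose DAG runs one container per step.

    `steps` maps each step name to the names of the steps it depends on.
    Every step here only echoes its name; a real pipeline would run code.
    """
    unknown = {d for deps in steps.values() for d in deps} - set(steps)
    if unknown:
        raise ValueError(f"unknown dependencies: {sorted(unknown)}")
    if "main" in steps:
        raise ValueError("'main' is reserved for the DAG entrypoint")

    tasks = []
    for name, deps in steps.items():
        task = {"name": name, "template": name}
        if deps:
            # Argo starts this task only after all listed tasks succeed.
            task["dependencies"] = list(deps)
        tasks.append(task)

    step_templates = [
        {"name": name,
         "container": {"image": image, "command": ["echo"], "args": [f"running {name}"]}}
        for name in steps
    ]
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "ml-pipeline-"},
        "spec": {
            "entrypoint": "main",
            "templates": [{"name": "main", "dag": {"tasks": tasks}}, *step_templates],
        },
    }


if __name__ == "__main__":
    wf = argo_dag_workflow({
        "preprocess": [],
        "train": ["preprocess"],
        "serve": ["train"],
    })
    print(json.dumps(wf, indent=2))
```

Submitting the printed JSON with `argo submit` or `kubectl create -f` would run the three steps in dependency order. In practice the Pipelines SDK generates the workflow from Python functions, so writing it by hand is mainly useful for seeing what a pipeline eventually runs as.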

Katib

Katib is a Kubernetes-based tool for Automated Machine Learning (AutoML) that includes features such as hyperparameter tuning, early stopping, and Neural Architecture Search (NAS). It is compatible with multiple frameworks and offers a wide range of AutoML algorithms, including Bayesian Optimization, Tree of Parzen Estimators, Random Search, Differentiable Architecture Search, and more. At the time of writing, Katib is in beta.
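As an illustration of the tuning workflow, the sketch below builds a Katib `Experiment` that runs random search over a learning rate. It assumes the `kubeflow.org/v1beta1` Experiment schema and a hypothetical training image whose `train.py` accepts `--lr` and prints `Validation-accuracy=<value>` to stdout; the goal, trial counts, and search range are arbitrary example values.

```python
import json


def katib_random_search(name: str, namespace: str, image: str) -> dict:
    """Build a Katib Experiment that tunes a learning rate with random search.

    Assumes the kubeflow.org/v1beta1 Experiment schema. The (hypothetical)
    training image must print "Validation-accuracy=<value>" so that Katib's
    default stdout metrics collector can read the objective.
    """
    return {
        "apiVersion": "kubeflow.org/v1beta1",
        "kind": "Experiment",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "objective": {
                "type": "maximize",
                "goal": 0.99,
                "objectiveMetricName": "Validation-accuracy",
            },
            "algorithm": {"algorithmName": "random"},
            "parallelTrialCount": 3,
            "maxTrialCount": 12,
            "maxFailedTrialCount": 3,
            "parameters": [
                {
                    "name": "lr",
                    "parameterType": "double",
                    # Feasible-space bounds are given as strings.
                    "feasibleSpace": {"min": "0.01", "max": "0.03"},
                }
            ],
            "trialTemplate": {
                "primaryContainerName": "training-container",
                "trialParameters": [
                    {"name": "learningRate", "description": "Learning rate", "reference": "lr"}
                ],
                # Each trial runs as a Kubernetes Job; Katib fills in the placeholder.
                "trialSpec": {
                    "apiVersion": "batch/v1",
                    "kind": "Job",
                    "spec": {
                        "template": {
                            "spec": {
                                "containers": [
                                    {
                                        "name": "training-container",
                                        "image": image,
                                        "command": ["python3", "train.py",
                                                    "--lr=${trialParameters.learningRate}"],
                                    }
                                ],
                                "restartPolicy": "Never",
                            }
                        }
                    },
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(katib_random_search("lr-search", "kubeflow", "example/train:latest"), indent=2))
```

Changing `algorithmName` to another supported value, such as `bayesianoptimization` or `tpe`, should switch the search strategy without touching the rest of the Experiment.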

Training Operators

The Kubeflow Training Operator provides a set of Kubernetes custom resources (TFJob, PaddleJob, PyTorchJob, and more) that automate the deployment and scaling of distributed ML training jobs.
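For example, a distributed PyTorch run is described as a `PyTorchJob`. The sketch below, assuming the `kubeflow.org/v1` PyTorchJob API and a hypothetical training image, builds one with a single master and a configurable number of workers.

```python
import json


def pytorch_job(name: str, namespace: str, image: str, workers: int) -> dict:
    """Build a PyTorchJob with one Master replica and `workers` Worker replicas.

    Assumes the kubeflow.org/v1 PyTorchJob schema. The training operator
    injects MASTER_ADDR, MASTER_PORT, WORLD_SIZE, and RANK into each pod so
    that torch.distributed can initialize from environment variables.
    """
    if workers < 0:
        raise ValueError("workers must be non-negative")

    def replica(count: int) -> dict:
        return {
            "replicas": count,
            "restartPolicy": "OnFailure",
            "template": {
                "spec": {
                    # The operator expects the training container to be named "pytorch".
                    "containers": [{"name": "pytorch", "image": image}]
                }
            },
        }

    specs = {"Master": replica(1)}
    if workers:
        specs["Worker"] = replica(workers)
    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "PyTorchJob",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"pytorchReplicaSpecs": specs},
    }


if __name__ == "__main__":
    print(json.dumps(pytorch_job("dist-train", "kubeflow", "example/train:latest", 2), indent=2))
```

Scaling the job then means changing `workers`. TFJob and the other job kinds follow the same replica-spec pattern with their own role names (for example `Chief`, `Worker`, and `PS` under `tfReplicaSpecs`).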

Model Serving

Kubeflow offers two model serving systems, KFServing (since renamed KServe) and Seldon Core, each equipped with a comprehensive set of features to meet the diverse needs of engineers. These features include support for different ML frameworks, inference graphs, analytics, scaling, custom serving, and integration with Istio for traffic management, telemetry, and security in complex deployments.
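To show what serving looks like on the KServe side (Seldon Core uses its own resources and is not covered here), the sketch below builds an `InferenceService` and the request path and body for KServe's V1 prediction protocol. It assumes the `serving.kserve.io/v1beta1` API; the model name, namespace, and storage URI in the example are taken from KServe's public scikit-learn iris tutorial and may change between releases.

```python
import json
from typing import List, Tuple


def inference_service(name: str, namespace: str, storage_uri: str,
                      model_format: str = "sklearn") -> dict:
    """Build a KServe InferenceService (serving.kserve.io/v1beta1).

    KServe selects a serving runtime that supports `model_format` and loads
    the model from `storage_uri` (for example a gs:// or s3:// path).
    """
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "predictor": {
                "model": {
                    "modelFormat": {"name": model_format},
                    "storageUri": storage_uri,
                }
            }
        },
    }


def v1_predict_request(name: str, instances: List[list]) -> Tuple[str, str]:
    """Return the URL path and JSON body for a V1-protocol prediction."""
    return f"/v1/models/{name}:predict", json.dumps({"instances": instances})


if __name__ == "__main__":
    isvc = inference_service(
        "sklearn-iris", "kserve-test",
        "gs://kfserving-examples/models/sklearn/1.0/model",
    )
    print(json.dumps(isvc, indent=2))
    print(v1_predict_request("sklearn-iris", [[6.8, 2.8, 4.8, 1.4]]))
```

Once the service is ready, POSTing that body to the returned path on the service's URL should return a `predictions` list, one entry per instance.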

ML Frameworks

Chainer

Chainer is a define-by-run deep learning framework. It also supports multi-node distributed deep learning and deep reinforcement learning algorithms.[1]

Ksonnet

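Ksonnet provided a simple way to create and edit Kubernetes configuration files, and early Kubeflow releases used it to help manage deployments.[1] The ksonnet project has since been discontinued, and current Kubeflow releases use Kustomize instead.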

PyTorch

PyTorch is a Python deep learning library developed by Facebook, based on the Torch library, which used the Lua programming language.[1]

TensorFlow

TensorFlow provides an ecosystem for large-scale deep learning models. In Kubeflow, this includes distributed training with TFJob, serving with TF Serving, and other TensorFlow Extended (TFX) components such as TensorFlow Model Analysis (TFMA) and TensorFlow Transform (TFT).[1]
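When a TFJob runs, the training operator tells each pod its role through the `TF_CONFIG` environment variable, the JSON format that `tf.distribute` strategies read. The standard-library sketch below parses it so a training script can branch on its role; the cluster addresses in the example are made up for illustration.

```python
import json
import os
from typing import Mapping, Optional, Tuple


def tf_role(env: Optional[Mapping[str, str]] = None) -> Tuple[str, int, int]:
    """Return (task type, task index, number of cluster members) from TF_CONFIG.

    Falls back to a single local worker when TF_CONFIG is absent,
    for example when the script runs outside a TFJob.
    """
    env = os.environ if env is None else env
    raw = env.get("TF_CONFIG")
    if not raw:
        return "worker", 0, 1
    config = json.loads(raw)
    cluster = config.get("cluster", {})
    task = config.get("task", {})
    # Count every address across all roles (chief, worker, ps, ...).
    total = sum(len(addresses) for addresses in cluster.values())
    return task.get("type", "worker"), int(task.get("index", 0)), total


if __name__ == "__main__":
    example = {
        "cluster": {
            "chief": ["demo-chief-0:2222"],
            "worker": ["demo-worker-0:2222", "demo-worker-1:2222"],
        },
        "task": {"type": "worker", "index": 1},
    }
    print(tf_role({"TF_CONFIG": json.dumps(example)}))  # ('worker', 1, 3)
```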

MXNet

MXNet is a portable, scalable deep learning library with frontends for multiple languages, such as Python, Julia, MATLAB, and JavaScript.[1]

Kubeflow vs. Main Tools for MLOps

The table below summarizes the tools for machine learning process management and execution.

| | Kubeflow | Argo Workflows | Luigi | MLflow | Airflow |
|---|---|---|---|---|---|
| License | Apache 2.0 | Apache 2.0 | Apache 2.0 | Apache 2.0 | Apache 2.0 |
| Purpose | ML pipeline execution | Run CI/CD ML pipelines natively on Kubernetes | ML pipeline execution | Experiment management | ML pipeline execution |
| Description | Kubeflow provides a platform with components to make ML workflow implementations on Kubernetes simple, portable, and scalable. | Argo Workflows is focused on providing a native Kubernetes-based workflow engine that allows users to create and manage complex and parallel workflows. | Luigi is a Python library for building complex pipelines of batch jobs. It is designed to handle dependencies between tasks and allow parallel execution of data processing and ML pipelines. | MLflow is a platform that manages the ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry. | Airflow is a platform for developing, scheduling, and monitoring batch-oriented workflows. |
| Supported Frameworks | Kubeflow supports a wide array of projects like TensorFlow, PyTorch, Chainer, Apache MXNet, Ambassador, XGBoost, Istio, Nuclio, and more. | – | – | MLflow supports several frameworks like TensorFlow, PyTorch, scikit-learn, MPI, MXNet, XGBoost, and Spark. | – |
| Environments | It runs on clusters, clouds, and on-premises that use Kubernetes. | It runs on clusters, clouds, and on-premises that use Kubernetes. | Anywhere Python is installed. | MLflow runs on various execution environments, including local machines, remote VMs, and containerized environments. | Workflows are defined in Python code. |
| Components | Kubeflow Notebooks, Kubeflow Pipelines, Katib, Training Operators, and Model Serving. | Argo Project modules include Argo Workflows, Argo Rollouts, and Argo CD. | Packages: `luigi.configuration`, `luigi.contrib`, `luigi.tools`, `luigi.batch_notifier`, `luigi.cmdline`, `luigi.cmdline_parser`, and more. | MLflow Tracking, MLflow Projects, MLflow Models, and Model Registry. | Webserver, Scheduler, Database, Executor, Worker, Triggerer, DAGs, XComs. |

Sources: Kubeflow, Argo Workflows, MLflow, Apache Airflow, Luigi.

Quick Start Guide: Kubeflow Pipeline

Getting started with Kubeflow can involve a few different steps, depending on your specific use case and environment. Here is an overview of steps you might take:

- Create a Kubernetes Cluster: Kubeflow can run on managed Kubernetes services such as Google Kubernetes Engine (GKE), Azure Kubernetes Service (AKS), IBM Cloud, or AWS. We suggest following the Kubeflow installation guide for your provider to ensure the cluster is set up properly before installing it.

- Installing and Deploying Kubeflow: There are a few different ways to deploy Kubeflow. Up to Kubeflow 1.2, one of the easiest was kfctl, a command-line tool for deploying and managing Kubeflow; newer releases are installed from the kubeflow/manifests repository with Kustomize and kubectl, or through a packaged distribution.

- Access the Kubeflow Dashboard: Users can reach the Kubeflow Central Dashboard through the cluster's Istio ingress gateway, for example by running kubectl port-forward and opening the forwarded port in a browser. The dashboard provides a user-friendly interface for managing and monitoring your Kubeflow cluster.

- Create and Run an ML Pipeline: Pipelines can be uploaded and run from the Kubeflow Pipelines UI, or defined and submitted programmatically with the Pipelines SDK.

- Monitor and troubleshoot: The dashboard provides a variety of tools for monitoring pipeline runs, including log viewers and metrics dashboards.

- Integrate with other tools: Kubeflow can integrate with ML tools such as Jupyter, TensorFlow, and Seldon, enabling users to develop and test ML models.
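For recent releases installed from the `kubeflow/manifests` repository, the install and dashboard steps usually come down to two commands from its README: a retry loop around `kustomize build example | kubectl apply -f -` (early attempts can fail while custom resource definitions and webhooks are still being registered) and a `kubectl port-forward` to the Istio ingress gateway. The Python sketch below wraps the retry loop with `subprocess`; it assumes `kustomize` and `kubectl` are on the PATH and that it runs from a checkout of that repository. The exact `kubectl apply` flags differ between releases, so check the README for your version.

```python
import subprocess
import time
from typing import Callable

# Forward local port 8080 to the Istio ingress gateway that fronts the dashboard.
PORT_FORWARD = ["kubectl", "port-forward", "svc/istio-ingressgateway",
                "-n", "istio-system", "8080:80"]


def install_kubeflow(manifests_dir: str = "example", attempts: int = 10,
                     delay_s: float = 10.0,
                     run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
                     sleep: Callable[[float], None] = time.sleep) -> int:
    """Apply the Kustomize-built manifests until kubectl succeeds.

    Returns the attempt number that succeeded. `run` and `sleep` are
    injectable so the retry logic can be exercised without a cluster.
    """
    for attempt in range(1, attempts + 1):
        built = run(["kustomize", "build", manifests_dir],
                    capture_output=True, text=True)
        if built.returncode == 0:
            # Pipe the rendered manifests into kubectl via stdin ("-f -").
            applied = run(["kubectl", "apply", "-f", "-"], input=built.stdout,
                          capture_output=True, text=True)
            if applied.returncode == 0:
                return attempt
        print(f"attempt {attempt} failed")
        if attempt < attempts:
            sleep(delay_s)
    raise RuntimeError(f"Kubeflow manifests not applied after {attempts} attempts")
```

In use, calling `install_kubeflow()` and then `subprocess.run(PORT_FORWARD)` mirrors the shell workflow, after which the dashboard should be reachable at http://localhost:8080 for as long as the port-forward keeps running.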

Note: keep in mind that this guide is a high-level overview of a complex process, and the exact steps may vary depending on your specific use case and environment.

Kubeflow Use Cases

Understanding specific use cases is crucial as it highlights Kubeflow’s practical applications and justifies the added complexity of managing it. Some ways in which users can utilize Kubeflow and its features are:

- Training and serving ML models in different environments, such as cloud, on-premises, or hybrid cloud.

- Using Jupyter notebooks to manage ML training jobs.

- Launching validation models.

- Deploying and scaling ML models and computing resources.

- Combining ML code from different libraries.

- Tuning and optimizing hyperparameters.

- Researching and developing more sophisticated ML models.

Highlights

Project Background

- Project: Kubeflow

- Author: Google

- Initial Release: 2018

- Type: Workflow Engine

- License: Apache 2.0

- Language: Python, Go, JavaScript, HTML, TypeScript, Jsonnet

- Runs On: Anywhere Kubernetes runs (cloud, on-premises, or hybrid)

- Hardware: Supports CPUs, GPUs, and TPUs

- Twitter: Kubeflow

Main Features

- Supports various architectural use cases

- Scales workloads without much effort

- Works for training and inference

- Built on top of Kubernetes

Prior Knowledge Requirements

- Access tokens (OAuth)

- Anthos Config Management (Config Controller)

- Environment variables

- Kpt

- Kubernetes CRDs

- Kubernetes operators

- Kustomize

- Linux CLI

- Makefiles

- Source: Scientific Article

Community Benchmarks

- 162,200 Stars

- 2,100 Forks

- 261+ Code contributors

- 75+ Releases

- Source: GitHub

Releases

- v1.6.0 (2022-09-07): Fixes and upgrades: Expose notebook idleness in the Notebook management web app.

- v1.5.0 (2022-03-10): Fixes and upgrades: Notebooks: extend the Notebook Controller to expose idleness for Jupyter.

- v1.4.0 (2021-10-11): Fixes and upgrades: Jupyter web app fix for autoscaling GPU node groups.

- v1.3.0 (2021-05-27): Stable release for components owned by WG-Notebooks.

- Source: Releases GitHub

References

[1] Bisong, E. (2019). Kubeflow and Kubeflow Pipelines. In Building Machine Learning and Deep Learning Models on Google Cloud Platform: A Comprehensive Guide for Beginners (pp. 671-685). Apress.

[2] Köhler, A. (2022). Evaluation of MLOps Tools for Kubernetes: A Rudimentary Comparison Between Open Source Kubeflow, Pachyderm and Polyaxon.

[3] Unruh, A. (2018). Getting started with Kubeflow. Google.

[4] Kubeflow. https://www.kubeflow.org/